First Part: Introduction to Parallel Computing

What is Parallel Computing?

(a) Serial Computing:

Traditionally, software has been written for serial computation:

(1) A problem is broken into a discrete series of instructions (or work units)

(2) Instructions are executed sequentially, one after another

(3) Executed on a single processor

(4) Only one instruction may execute at any moment in time

(b) Parallel Computing

In the simplest sense, parallel computing is the simultaneous use of multiple compute resources to solve a computational problem:

(1) A problem is broken into discrete parts that can be solved concurrently

(2) Each part is further broken down into a series of instructions (or work units)

(3) Instructions from each part execute simultaneously on different processors

(4) An overall control/coordination mechanism is employed
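The two lists above can be sketched in a few lines of Python. This is only a minimal illustration, not a tuned implementation: the task (a sum of squares) and the helper names `sum_of_squares`, `split`, `solve_serial`, and `solve_parallel` are assumptions made up for this example. The standard-library `ProcessPoolExecutor` stands in for the "overall control/coordination mechanism".

```python
from concurrent.futures import ProcessPoolExecutor


def sum_of_squares(part):
    """Work on one discrete part: a series of instructions run in order."""
    total = 0
    for n in part:
        total += n * n
    return total


def split(numbers, pieces):
    """Break the problem into `pieces` parts that can be solved independently."""
    return [numbers[i::pieces] for i in range(pieces)]


def solve_serial(numbers):
    """Serial: a single instruction stream on a single processor."""
    return sum_of_squares(numbers)


def solve_parallel(numbers, workers=4):
    """Parallel: each part is handed to its own worker process."""
    parts = split(numbers, workers)
    with ProcessPoolExecutor(max_workers=workers) as pool:
        partial_sums = pool.map(sum_of_squares, parts)  # parts may run at the same time
        return sum(partial_sums)  # coordination step: combine the partial results


if __name__ == "__main__":
    data = list(range(1_000_000))
    # Both approaches must give the same answer; only the execution differs.
    assert solve_serial(data) == solve_parallel(data)
    print(solve_parallel(data))
```

For a task this small, the cost of starting processes and copying data can outweigh the gain, so the parallel version is not guaranteed to be faster.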

The computational problem should be able to:

- Be broken apart into discrete pieces of work that can be solved simultaneously

- Execute multiple program instructions at any moment in time

- Be solved in less time with multiple compute resources than with a single compute resource
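The last requirement can be checked with simple arithmetic. The sketch below uses the common definitions of speedup (serial time divided by parallel time) and efficiency (speedup divided by the number of processors). The function names and the timings are hypothetical and chosen only for illustration.

```python
def speedup(serial_seconds, parallel_seconds):
    """How many times faster the parallel run is than the serial run."""
    return serial_seconds / parallel_seconds


def efficiency(serial_seconds, parallel_seconds, processors):
    """Fraction of the ideal speedup actually achieved (1.0 is ideal)."""
    return speedup(serial_seconds, parallel_seconds) / processors


# Hypothetical timings: 120 s on one processor, 40 s on four processors.
print(speedup(120.0, 40.0))        # 3.0
print(efficiency(120.0, 40.0, 4))  # 0.75
```

A speedup above 1 means the problem was solved in less time with multiple compute resources. An efficiency below 1 means some processors were idle or busy with coordination part of the time.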

The compute resources are typically:

(1) A single computer with multiple processors/cores

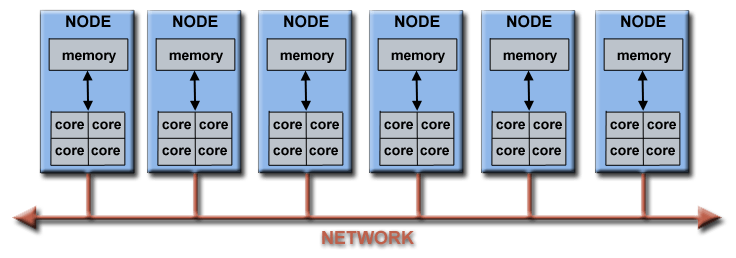

(2) An arbitrary number of such computers connected by a network

Parallel Computers:

- Virtually all stand-alone computers today are parallel from a hardware perspective:

(1) Multiple functional units

(2) Multiple execution units/cores

(3) Multiple hardware threads

- Networks connect multiple stand-alone computers (nodes) to make larger parallel computer clusters.
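To see how parallel your own machine is, Python's standard `os` module can report the number of logical CPUs (cores multiplied by hardware threads per core). This is a small sketch. The helper names `logical_cpus` and `usable_cpus` are made up for this example, and `os.sched_getaffinity` exists only on some platforms (for example Linux), so the code checks for it first.

```python
import os


def logical_cpus():
    """Logical CPUs reported by the operating system (at least 1)."""
    return os.cpu_count() or 1  # os.cpu_count() may return None


def usable_cpus():
    """CPUs this process is allowed to run on, where the OS exposes that."""
    if hasattr(os, "sched_getaffinity"):  # not available on every platform
        return len(os.sched_getaffinity(0))
    return logical_cpus()


if __name__ == "__main__":
    print("logical CPUs:", logical_cpus())
    print("usable by this process:", usable_cpus())
```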

Extra Knowledge:

The majority of the world's large parallel computers (supercomputers) are clusters of hardware produced by a handful of well-known vendors (source: http://top500.org/).

What is meant by...?

Serial

Running only a single task, as opposed to multitasking

Parallel

Computing involving the simultaneous performance of operations

Computer Clusters

A computer cluster consists of a set of loosely or tightly connected computers that work together so that, in many respects, they can be viewed as a single system.

Source: https://computing.llnl.gov/tutorials/parallel_comp/

Next Part: Why Study Parallel Computing?

Are there any disadvantages to using parallel computing?

In my opinion, everything has its advantages and disadvantages. One disadvantage is that a parallel computer makes latent poor performance obvious. On a serial computer, the processor may wait as it moves data from RAM to cache, but it appears busy all the time, and only a more detailed analysis shows that it is actually performing poorly. On a parallel computer with 100 processors, most people quickly notice that a program is not running 100 times faster. The serial program may not have been very good either, but that is generally not as obvious as the fact that many of the parallel processors are often idle.

For more information, please see http://web.eecs.umich.edu/~qstout/parallel.html